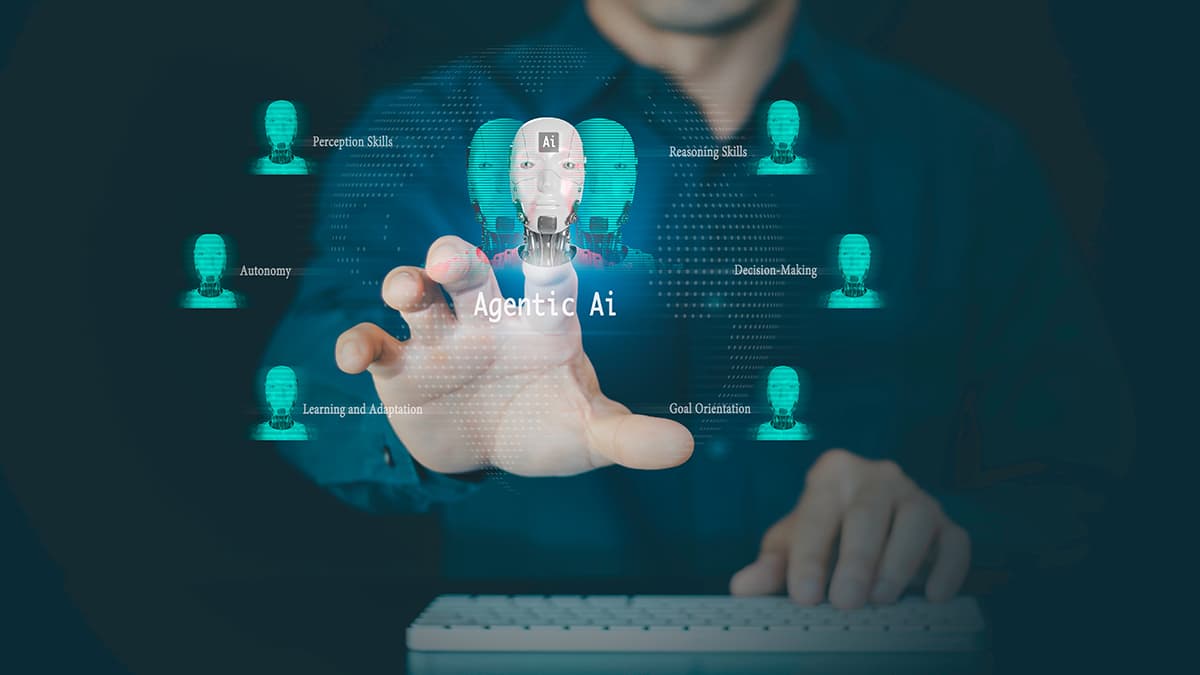

Unlike traditional single-mode systems, multimodal ML processes multiple types of input simultaneously – much like how humans naturally integrate various sensory inputs to comprehend their environment.

At its core, multimodal ML represents a significant departure from conventional machine learning systems that typically handle only one type of data, such as text or images in isolation. By combining various input types – text, images, audio, and more – these systems achieve a more comprehensive and nuanced understanding of their environment, mirroring human cognitive processes.

_1737374029.jpeg)

Key Applications and Capabilities

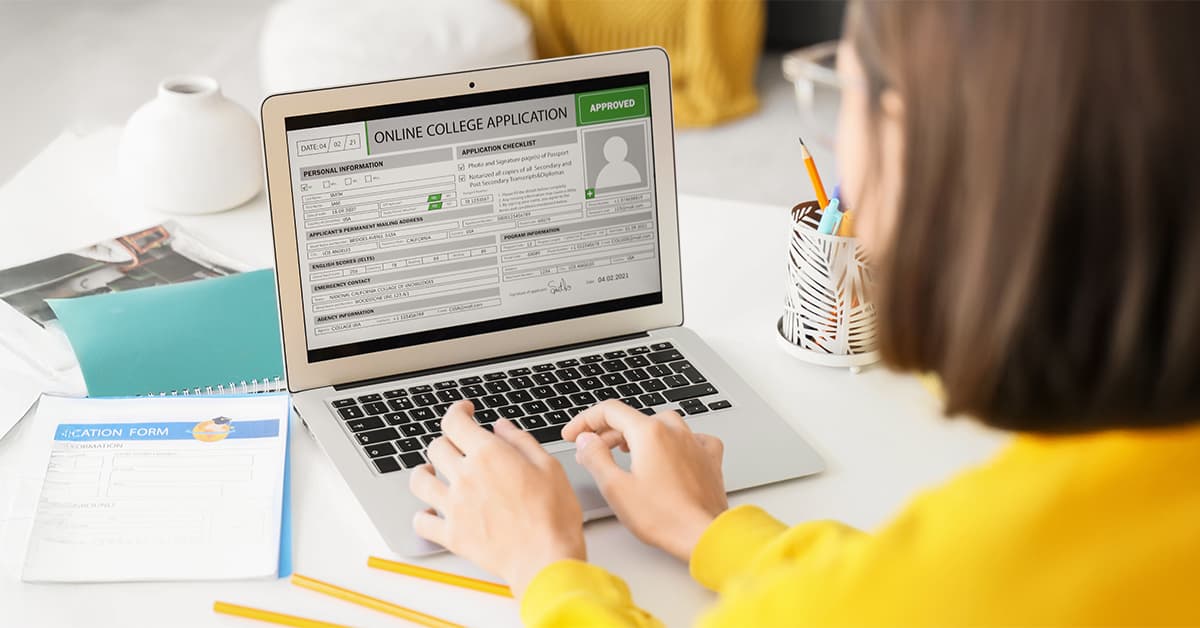

Visual Question Answering (VQA) and visual reasoning stand out as prime examples of multimodal ML's capabilities. These systems can analyze images and respond to queries about their content, demonstrating an understanding that goes beyond simple object recognition. For instance, a VQA system can not only identify objects in a scene but also infer relationships between them and answer complex questions about their arrangement or context.

Document Visual Question Answering (DocVQA) takes this technology into the practical realm of business and administration. By combining computer vision with natural language processing, DocVQA systems can "read" documents from images, understanding both their content and layout. This capability has profound implications for automated document processing and information extraction.

Perhaps one of the most exciting applications is image captioning and text-to-image generation. These technologies bridge the gap between visual and linguistic understanding in both directions. Image captioning systems can generate natural, descriptive text from images, while text-to-image generation creates visual content from written descriptions. This bidirectional capability opens up new possibilities for creative expression and communication.

Visual grounding represents another sophisticated application, enabling precise connections between language and specific parts of an image. This technology can understand and respond to specific queries about image elements, making it invaluable for applications ranging from educational tools to autonomous systems.

Practical Applications Across Industries

_1737374047.jpeg)

The impact of multimodal ML extends across numerous sectors. In healthcare, these systems can analyze medical images alongside patient records for more accurate diagnoses. In autonomous vehicles, they integrate visual data with sensor information for better navigation. In education, they create more engaging and interactive learning experiences by combining various forms of media.

Image-text retrieval systems are revolutionizing how we search and organize visual content. By understanding both the visual and textual aspects of information, these systems can create more accurate and context-aware search results, benefiting fields from digital asset management to e-commerce.

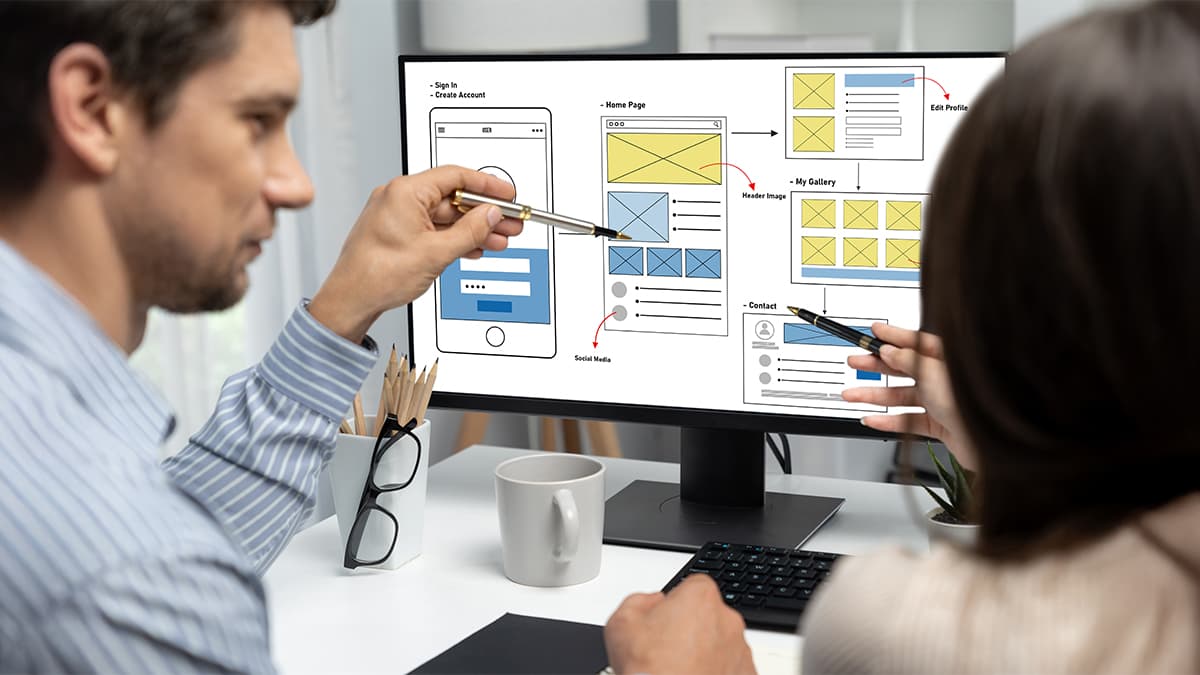

For organizations looking to implement multimodal ML, careful planning is essential. The first step is identifying specific use cases where multimodal processing adds genuine value. Not every application requires multiple modalities, and implementing these systems unnecessarily can lead to increased complexity and cost.

System capabilities must be carefully evaluated. Multimodal ML typically requires more computational resources than single-mode systems. Organizations need to ensure their infrastructure can support these demands while maintaining performance and reliability.

As we look ahead, multimodal ML is poised to become increasingly prevalent. The technology's ability to process and integrate different types of information makes it particularly well-suited for creating more natural and intuitive human-machine interactions. We're likely to see continued advancement in areas like emotional intelligence, where systems can interpret not just what is said, but how it's said, combining vocal tone, facial expressions, and text analysis.

As processing power increases and algorithms become more sophisticated, we can expect these systems to handle increasingly complex tasks with greater accuracy and efficiency.

At CSM, we work on mitigating issues around machine learning for companies that have begun adopting GenAI and traditional AI/ML applications. Talk to us today about your challenges: www.csm.tech/americas/contact-us

Want to start a project?

Get your Free ConsultationOur Recent Blog Posts

© 2026 CSM Tech Americas All Rights Reserved